As the United States moves into the twenty-first century in a world of diverse dangers and threats marked by the proliferation of weapons of mass destruction, unconventional warfare, and sophisticated enemy countermeasures, surveillance and reconnaissance are not only critical but essential for achieving the "high ground" in information dominance, conflict management, and war fighting. As defined by the Joint Chiefs of Staff (JCS), surveillance is the "systematic observation of aerospace, surface or subsurface areas, places, persons, or things, by visual, aural, electronic, photographic, or other means."1 Similarly, reconnaissance refers to "a mission undertaken to obtain, by visual observation or other detection methods, information about the activities and resources of an enemy or potential enemy."2 Both surveillance and reconnaissance are critical to US security objectives of maintaining national and regional stability and preventing unwanted aggression around the world.

Key to achieving information dominance will be the gradual evolution of technology (i.e., sensor development, computation power, and miniaturization) to provide a continuous, real-time picture of the battle space to war fighters and commanders at all levels. Advances in surveillance and reconnaissance-particularly real-time "sensor-to-shooter" capability to support "one shot, one kill" technology-will be a necessity if future conflicts are to be supported by a society conditioned to "quick wars" with high operational tempos, minimal casualties, and low collateral damage.

The rigorous information demands of the war fighter, commander, and national command authorities (NCA) in the year 2020 will require a system and architecture to provide a high-resolution "picture" of objects in space, in the air, on the surface, and below the surface-be they concealed, mobile or stationary, animate or inanimate. The true challenge is not only to collect information on objects with much greater fidelity than is possible today, but also to process the information orders of magnitude faster and disseminate it instantly in the desired format.

The Key to the Concept: Structural Sensory Signatures

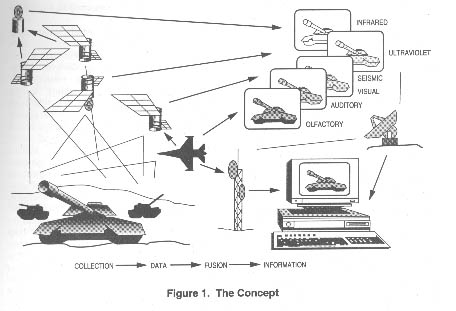

The critical concept of this article is to develop an omnisensorial capability that includes all forms of inputs from the sensory continuum. This new term seeks to expand our present exploration of the electromagnetic spectrum to encompass the "exotic" sensing technologies proposed in this article. This system will collect and fuse data from all sensory inputs-optical, olfactory, gustatory, infrared (IR), multispectral, tactile, acoustical, laser radar, millimeter wave radar, X ray, DNA patterns, human intelligence (HUMINT)-to identify objects (buildings, airborne aircraft, people, and so forth) by comparing their structural sensory signatures (SSS) against a preloaded database in order to identify matches or changes in structure. The identification aspect has obvious military advantages in the processes of indications and warning, target identification and classification, and combat assessment.

An example of how this technique might actually develop involves establishing a sensory baseline for certain specific objects and structures. The system would optically scan a known source-such as an aircraft or building full of nuclear or command, control, communications, computers, and intelligence (C4I) equipment-from all angles and then smell; listen to; feel; measure density, IR emissions, light emissions, heat emissions, sound emissions, propulsion emissions, air-displacement patterns in the atmosphere, and so forth; and synthesize that information into a sensory signature of that structure. This map would then be compared to sensory signature patterns of target subjects such as Scud launchers or even people. A simple but effective example of a sensory signature was discovered by the Soviets during the height of the cold war. They discovered that the neutrons given off by nuclear warheads in our weapons-storage areas interacted with the sodium arc lights surrounding the area, creating a detectable effect. This simple discovery allowed them to determine whether a storage area contained a nuclear warhead.3
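The baseline-and-compare idea described above can be illustrated with a minimal sketch. It assumes that a sensory signature is stored as one number per sensing channel and that a "change in structure" means a relative deviation beyond a fixed tolerance. The channel names, units, and the 10 percent tolerance are hypothetical; the article does not define a data format.

```python
from dataclasses import dataclass

# Hypothetical representation: one reading per sensing channel.
Signature = dict[str, float]


@dataclass
class ChannelChange:
    channel: str
    baseline: float
    observed: float
    relative_change: float


def compare_to_baseline(
    baseline: Signature, observed: Signature, tolerance: float = 0.10
) -> list[ChannelChange]:
    """Return the channels whose reading departs from the baseline
    by more than `tolerance` (as a fraction of the baseline value)."""
    changes = []
    for channel, base_value in baseline.items():
        if channel not in observed:
            continue  # no reading on this channel, so nothing to compare
        obs_value = observed[channel]
        # Avoid dividing by zero when the baseline reading is zero.
        scale = abs(base_value) if base_value != 0 else 1.0
        rel = (obs_value - base_value) / scale
        if abs(rel) > tolerance:
            changes.append(ChannelChange(channel, base_value, obs_value, rel))
    return changes


# Illustrative values only.
baseline = {"ir_emission": 120.0, "acoustic_level": 60.0, "neutron_flux": 0.0}
observed = {"ir_emission": 125.0, "acoustic_level": 75.0, "neutron_flux": 0.5}
changed = compare_to_baseline(baseline, observed)
print([c.channel for c in changed])  # ['acoustic_level', 'neutron_flux']
```

A fielded system would presumably weight channels by reliability rather than apply one tolerance to all of them; the single threshold here only keeps the sketch short.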

Sensory identification could then use the information to create virtual images (similar to the way architects and aircraft designers use three-dimensional computer-aided design [CAD] software), including the most likely internal workings of the target building, aircraft, or person so one could actually "look" inside and see the inner workings. A good example is Boeing's use of computer-aided three-dimensional interactive application (CATIA) for design of its new 777 aircraft. The "virtual airplane" was the first aircraft built completely in cyberspace (i.e., the first built entirely on computer so that engineers could "look at" it thoroughly before actually building it).4

This "imaging" could be carried one step further by techniques such as noninvasive magnetic source imaging and magnetic resonance imaging (MRI), which are now used in neurosurgical applications for creating an image of the actual internal construction of the subject.5 In fact, the numerous nonintrusive medical procedures now used on the human body might be extrapolated to extend to "long-range" sensing. The nuclear materials for these "structural MRIs" could be delivered by PGMs or drones and introduced into the ventilation system of a target building. The material would circulate throughout the structure and eventually be "sensed" remotely to display the internal workings of the structure.

Another extension of the concept of distance sensing would be the tracking of mitochondrial DNA found in human bones. DNA technology is currently being used by the US Army's Central Identification Laboratory for identifying war remains.6 If this technique could be used at a distance, the tracking of human beings becomes conceivable. By extrapolating such techniques from medicine, one could generate endless possibilities.

Further, a mass spectrometer that ionizes samples at ambient pressure using an efficient corona discharge could detect vapors and effluent liquids associated with many manufacturing processes.7 This technique is currently found in state-of-the-art environmental monitoring systems. There are also spectrometers that can analyze chemical samples through glass vials. Applying this technology from a distance and collating all the data will be the follow-on third- and fourth-order applications of this concept.

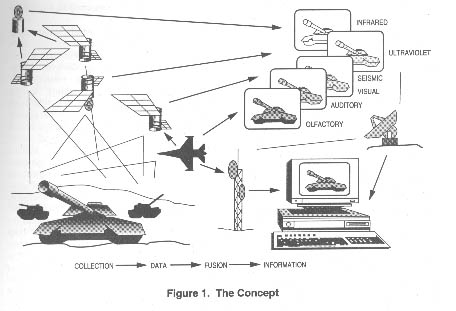

Another technology that would aid the identification of airborne subjects would be the National Aeronautics and Space Administration's (NASA) new airborne in-situ wind-shear detection algorithm.8 Although designed to detect turbulence, wind shear, and microburst conditions, this technology could be extrapolated to detect aircraft flights through a given area (perhaps by using some sort of detection net for national or point defense). This technique, coupled with observing disturbances in the earth's magnetic field, vortex-detection tracking of CO2 vapor trails, and identifying vibration and noise signatures, would create a sensory signature that could be compared to a database for classification (fig. 2).

The overall system would accumulate sensing data from a variety of sources, such as drone- or cruise-missile-delivered sensor darts, structural listening devices, space-based multispectral sensing, weather balloons, probes, airborne sound buoys, unmanned aerial vehicles (UAV), platforms such as airborne warning and control system (AWACS) and joint surveillance target attack radar system (JSTARS) aircraft, land radar, ground sensors, ships, submarines, surface and subsurface sound-surveillance systems, human sources, chemical and biological information, and so forth. The variety of sensing sources would serve several functions. First, spurious inputs could be "kicked out" of the system or given a lesser reliability value, much like the comparison of data from an aircraft equipped with a triple inertial navigation system when there is a discrepancy among separate inputs. Another important factor in handling a variety of inputs is that the system is harder to defeat when it does not rely on just a few key inputs. Finally, inputs from other nations and the commercial sector may be used as additional elements of data. Just as the current Civil Reserve Air Fleet (CRAF) system requires certain modifications for commercial aircraft to be used for military purposes in times of national emergency, so might commercial satellites contain subsystems designed to support the system envisioned above. In such a redundant system, failure to receive some data would not have a significant debilitating impact on the system as a whole.
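The triple-inertial-navigation analogy can be sketched as a simple voting scheme. This is only an illustration under stated assumptions: the median of the redundant readings is taken as the reference, any source farther than `max_deviation` from it receives a reduced weight, and the fused value is the weighted mean. The function name, the parameters, and the sample readings are hypothetical and do not come from the article.

```python
from statistics import median


def fuse_redundant(
    readings: dict[str, float],
    max_deviation: float,
    outlier_weight: float = 0.0,
) -> tuple[float, dict[str, float]]:
    """Median-vote fusion of redundant sources.

    Sources within `max_deviation` of the median keep full weight (1.0).
    The rest are "kicked out" (weight 0.0) or, if `outlier_weight` is set
    above zero, kept with a lesser reliability value.
    """
    mid = median(readings.values())
    weights = {
        source: 1.0 if abs(value - mid) <= max_deviation else outlier_weight
        for source, value in readings.items()
    }
    total = sum(weights.values())
    if total == 0:
        # Every source disagreed with the median; fall back to the median.
        return mid, weights
    fused = sum(readings[s] * w for s, w in weights.items()) / total
    return fused, weights


# Illustrative values: two sources agree, one is spurious.
fused, weights = fuse_redundant(
    {"ins_a": 100.2, "ins_b": 100.0, "ins_c": 137.5}, max_deviation=1.0
)
print(round(fused, 2), weights)
```

Because the reference is a median rather than a mean, a single wildly wrong input cannot drag the reference toward itself, which is the property the paragraph above relies on.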

To fuse and compare data, processors could take advantage of common neural-training regimens and pattern-recognition tools to sort data received from sensor platforms. Some of the data-fusion techniques we envision would require continued advancement in the world of data processing-a capability that is growing rapidly, as noted by Dr Gregory H. Canavan, chief of future technology at Los Alamos National Laboratory:

Frequent overflights by numerous satellites add the possibility of integrating the results of many observations to aid detection. That is computationally prohibitive today, requiring about 100 billion operations per second, which is a factor 10,000 greater than the compute rate of the Brilliant Pebble and about a factor of 1,000 greater than that of current computers. However, for the last three decades, computer speeds have doubled about every two years. At that rate, a factor of 1,000 increase in rate would only take about 20 years, so that a capability to detect and track trucks, tanks, and planes from space could become available as early as 2015.9

Dr Canavan also suggested that development time could be reduced even further by using techniques such as parallel computing and external inputs to reduce required computation rates.10 The point is that with conservatively forecast advancements in computer technology, the ability to gather and synthesize vast amounts of data will permit significant enhancements in remote sensing and data fusion.
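The arithmetic behind Dr Canavan's 20-year estimate can be checked in a few lines. The sketch assumes nothing beyond what the quotation states: compute rates double every two years, and the required increase is a factor of 1,000. The function name is illustrative.

```python
import math


def years_for_speedup(factor: float, doubling_period_years: float = 2.0) -> float:
    """Years needed to gain `factor` in speed if speed doubles
    once every `doubling_period_years`."""
    return math.log2(factor) * doubling_period_years


# A factor of 1,000 is just under ten doublings (2**10 = 1,024).
print(round(years_for_speedup(1000), 1))  # 19.9
```

Ten doublings at two years each give the "about 20 years" in the quotation. Any technique that lowers the required compute rate shortens the wait by two years for each factor of two saved.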

Using Space

As envisioned, this concept would be supported by systems in all operational media-sea, ground (both surface and subsurface), air, and space. However, space will play the critical role in this conceptual architecture. Although the system would rely on data from many sources other than space, using this medium as a primary source of data for sensing and fusing has definite advantages. Space allows prompt, wide-area coverage without the constraints imposed by terrain, weather, or political boundaries. It can provide worldwide or localized support to military operations by providing timely information for such functions as target development, mission planning, combat assessment, search and rescue (SAR), and special-forces operations.

Sensing and Data Fusion

The overall concept can be divided into three parts: the sensing phase, which uses ground-, sea-, air-, and space-based sensors; the data fusion phase, which produces information from raw data; and the dissemination phase, which delivers information to the user. This article examines the first two parts.

Sensing

The five human senses are used here as a metaphor for the concept of sensing described above. Although this representation is not precise, at least it provides a convenient beginning point for our investigation. For instance, human sensing capabilities are often inferior to those of other forms of life (e.g., the dog's sense of smell or the eagle's formidable eyesight).

Tremendous strides have been made in the sensing arena. However, some areas are more fully developed than others. For example, more advances have occurred in optical or visual sensing than in olfactory sensing. This article not only examines the more traditional areas of reconnaissance such as multispectral technology, but also discusses interesting developments in some unique areas. An exciting aspect is the discovery of research being conducted in the commercial realm, whose specific tasks require specific technologies and whose techniques have not yet been fully investigated for military uses.

The sensing areas examined here are (1) visual (including all forms of imaging, such as IR, radar, hyperspectral, etc.), (2) acoustic, (3) olfactory, (4) gustatory, and (5) tactile. There are two keys to this metaphoric approach to sensing. First, it unbinds the traditional electromagnetic orientation to sensing. Second, it provides a way of showing how all these sensors will be fused to allow fast, accurate decision making, such as that provided by the human brain.

Visual Sensing and Beyond. As mentioned above, remote image sensing has received a tremendous amount of attention in both military and civilian communities. The intention here is not to reproduce the vast amount of information on this subject but to describe briefly the current state of the art and to highlight some of the more innovative concepts from which we can step forward into the future. We will not discuss the imaging capabilities of the United States, other than to emphasize that they will need to be replaced or upgraded to meet the needs of the nation in 2020. The technologies and applications discussed below pave the way for these improvements.

Multispectral imaging (MSI) provides spatial and spectral information. It is currently the most widely used method of imaging spectrometry. The US-developed LANDSAT, French SPOT, and Russian Almaz are all examples of civil/commercial multispectral satellite systems that operate in multiple bands, provide ground resolution on the order of 10 meters, and support multiple applications. Military applications of multispectral imaging abound. The US Army is busily incorporating MSI into its geographic information systems for intelligence preparation of the battlefield or "terrain categorization" (TERCATS). The Navy and Marines use MSI for near-shore bathymetry, detection of water depths of uncharted waterways, support of amphibious landings, and ship navigation. MSI data can be used to help determine "go-no-go" and "slow-go" areas for enemy and friendly ground movements. By eliminating untrafficable areas, this information can be especially useful in tracking relocatable targets, such as mobile short-range and intermediate-range ballistic missile launchers. By using MSI data in the radar, IR, and optical bands, one can more quickly discern environmental damage caused by combat (or natural disasters). For example, LANDSAT imagery helped determine the extent of damage caused by oil fires set by the Iraqis in Kuwait during the Gulf War.11

Although MSI has a variety of applications and many advantages, use of this sensing technique results in a decrease of both bandwidth and resolution from conventional spectrometry. Additionally, multispectral systems cannot produce contiguous spectral and spatial information. We must overcome these disadvantages if we are to meet the surveillance and reconnaissance needs of the war fighter and commander of 2020.

One promising technology for overcoming these shortfalls is hyperspectral sensing, which can produce thousands of contiguous spatial elements of information simultaneously. This would allow a greater number of vector elements to be used for such things as achieving a higher certainty of space-object identification. Although hyperspectral models do exist, none have been optimized for missions from space or have been integrated with the current electro-optical, IR, and radar-imaging technologies.

This same technology can be equally effective for ground-target identification. Hyperspectral sensing can use all portions of the spectrum to scan a ground target or object, collect bits of information from each band, and fuse the information to develop a signature of the target or object. Since only a small amount of information may be available in various bands of the spectrum (some bands may not produce any information), the process of fusing the information and comparing it to data obtained from other intelligence and information sources becomes crucial.
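One way to picture signature matching when some bands return no information is the spectral angle, a standard remote-sensing measure of similarity that the article does not itself name. The sketch below is an assumption-laden illustration: spectra are short lists of reflectance values, a band with no usable data is marked `None`, and the angle is computed only over the bands that did return data. The library entries and reflectance values are invented for the example.

```python
import math
from typing import Optional, Sequence


def spectral_angle(
    observed: Sequence[Optional[float]], reference: Sequence[float]
) -> Optional[float]:
    """Angle in radians between two spectra, using only the bands in which
    `observed` holds data. Smaller angles mean more similar spectra."""
    pairs = [(o, r) for o, r in zip(observed, reference) if o is not None]
    if len(pairs) < 2:
        return None  # too few bands to say anything about spectral shape
    dot = sum(o * r for o, r in pairs)
    norm_o = math.sqrt(sum(o * o for o, _ in pairs))
    norm_r = math.sqrt(sum(r * r for _, r in pairs))
    if norm_o == 0 or norm_r == 0:
        return None
    # Clamp to guard against rounding just outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_o * norm_r)))
    return math.acos(cosine)


def best_match(
    observed: Sequence[Optional[float]], library: dict[str, Sequence[float]]
) -> Optional[str]:
    """Name of the library signature with the smallest spectral angle."""
    angles = {name: spectral_angle(observed, ref) for name, ref in library.items()}
    usable = {name: a for name, a in angles.items() if a is not None}
    return min(usable, key=usable.get) if usable else None


# Hypothetical five-band library; two bands of the observation are empty.
library = {
    "painted_metal": [0.30, 0.35, 0.40, 0.42, 0.45],
    "vegetation": [0.05, 0.08, 0.04, 0.50, 0.45],
}
observed = [0.28, None, 0.41, 0.40, None]
print(best_match(observed, library))  # painted_metal
```

With few surviving bands, several library entries may score almost equally well, which is why the comparison with other intelligence and information sources matters.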

Several war-fighting needs exist for a sensor that would provide higher fidelity and increased resolution to support, for example, US Space Command (USSPACECOM) and its components' missions of space control, space support, and force enhancement. In addition to the aforementioned examples of object identification in deep space (either from the ground or a space platform), identification of trace atmospheric elements, and certain target-identification applications, requirements also exist in the following areas: debris fingerprints, damage assessment, identification of space-object anomalies (ascertaining the condition of deep-space satellites), spacecraft interaction with ambient environment, terrestrial topography and condition, and verification of environmental treaties.

Currently under development are several technologies that can be integrated into hyperspectral sensing to further exploit ground- and space-object identification. Two promising technologies include remote ultralow light-level imaging (RULLI) and fractal image processing. RULLI is an initiative by the Department of Energy to develop an advanced technology for remote imaging using illumination as faint as starlight.12 This type of imaging encompasses leading-edge technology that combines high-spatial resolution with high-fidelity resolution. Long exposures from moving platforms become possible because high-speed image-processing techniques can be used to de-blur the image in software. RULLI systems can be fielded on surface-based, airborne, or space platforms, and-when combined with hyperspectral sensing-can form contiguous, continuous processing of spatial images using only the light from stars. This technology can be applied to tactical and strategic reconnaissance, imaging of biological specimens, detection of low-level radiation sources via atmospheric fluorescence, astronomical photography in the X-ray, ultraviolet (UV), and optical bands, and detection of space debris. RULLI depends on a new detector-the crossed-delayed line photon counter-to provide time and spatial information for each detected photon. However, by the end of fiscal year 1996, all technologies should be sufficiently developed to facilitate the design of an operational system.

The task of finding mobile surface vehicles requires rapid image processing. Automated preprocessing of images to identify potential target areas can drastically reduce the scope of human processing and provide the war fighter with more timely target information. Hyperspectral sensing can aid in quickly processing a large number of these images on board the sensing satellite, in identifying those few regions with a high probability of containing targets, and in downlinking data subsets to analysts for visual processing. Although fractal-like backgrounds can be defeated by cloud/smoke cover or camouflage, fractal image processing-if fused with information from other sensory sources-can help the analyst or the processing software identify ground-based signatures.13

Hyperspectral sensing offers a plethora of opportunities for deep-space and ground-object identification and characterization to support the war fighter's space-control-and-surveillance mission, remote sensing of atmospheric constituents and trace chemicals, and enhanced target identification. Collecting and fusing pieces of information from each band within the spectrum can provide high-fidelity images of ground or space-based signatures. Moreover, when combined with fused data from other sensory and nonsensory sources, hyperspectral sensing can provide target identification that no single surveillance system could ever provide. Thus, the war fighter has a much improved picture of the battle space-anywhere, anytime.

Acoustic Sensing. When matter within the atmosphere moves, it displaces molecules and sends out vibrations or waves of air pressure that are often too weak for our skin to feel. Waves of air pressure detected by the ears are called sound waves. The brain can tell what kind of sound has been heard from the way the hairs in the inner ear vibrate. Ears convert pressure waves passing through the air into electrochemical signals which the brain registers as a sound. This process is called acoustic sensing.

Electronically based acoustic sensing is not very old. Beginning with the development of radar prior to World War II, applications for acoustic sensing have continued to grow and now include underwater acoustic sensing (i.e., sonar), ground and subterranean-based seismic sensing, and the detection of communications and electronic signals from aerospace. Electromagnetic sensing operates in the lower end of the electromagnetic spectrum and covers a range from 30 hertz (Hz) to 300 gigahertz. Acoustic sensors have been fielded in various mediums, including surface, subsurface, air, and space. Since the advent of radar, most applications of acoustic sensing have been pioneered in the defense sector. Developments in space-based acoustic sensing in the Russian defense sector have recently become public. According to The Soviet Year in Space, 1990,

Whereas photographic reconnaissance satellites collect strategic and tactical data in the visible portion of the electromagnetic spectrum, ELINT satellites concentrate on the longer wavelengths in the radio and radar regions. . . . Most Soviet ELINT satellites orbit the earth at altitudes of 400 to 850 kilometers, patiently listening to the tell-tale electromagnetic emanations of ground-based radars and communications traffic.14

It is believed that the Russians use this space-based capability to monitor changes in the tactical order of battle, strategic defense posture, and treaty compliance.

On the ground, the United States used different kinds of acoustic sensors during the Vietnam War. The first one was derived from the sonobuoy developed by the US Navy to detect submarines. The USAF version used a battery-operated microphone instead of a hydrophone to detect trucks or even eavesdrop on conversations between enemy troops. The air-delivered seismic detection (ADSID) device was the most widely used sensor. It detected ground vibrations caused by trucks, bulldozers, and the occasional tank, although it could not differentiate with much accuracy between the vibrations made by a bulldozer and those made by a tank.15

Numerous examples of applications of acoustic sensing are found in the civil sector. In the United States, acoustic sensors that operate in the 800-900 Hz range are now being developed to help detect insects. Conceivably, these low-volume acoustic sensors could be further refined, either to work hand in hand with other spectral sensors or by themselves to classify insects and other animals, based on noise characteristics.16

Sandia National Laboratory in New Mexico has made progress in using acoustic sensors to detect the presence of chemicals in liquids and solids. In the nonlaboratory world, these acoustic sensing devices could be used as real-time environmental monitors to detect contamination, either in ground water or soil, and have both civil (e.g., natural-disaster assessment) and military (e.g., combat-assessment) applications.17

An additional development in the area of acoustic sensing involves seismic tomography to "image" surface and subsurface features. Seismic energy travels as an elastic wave that both reflects from and penetrates through the sea floor and structure beneath-as if we could see the skin covering our faces and the skeletal structure beneath at the same time. Energy transmitted through the earth's crust can also be used to construct an image.18

In summary, acoustic sensing offers great potential for helping the war fighter, commander, and war planner of the twenty-first century solve the problems of target identification and classification, combat assessment, target development, and mapping. For acoustic sensing from aerospace, a primary challenge appears to be in boosting noise signals through various mediums. Today, this is accomplished by using bistatic and multistatic pulse systems. In the year 2020, assuming continued advances in interferometry, the attenuation of electromagnetic "sound" through space should be a challenge already overcome, thus permitting very robust integration of acoustic sensing with other remote-sensing capabilities from aerospace.

A more serious challenge in defense-related acoustic sensing may come from enemy countermeasures. As operations and communications security improve, space-based acoustic sensing will become increasingly difficult. Containing emissions within a shielded cable or-better yet-a fiber-optic cable makes passive listening virtually impossible. The challenge for countries involved with space-based acoustic programs is to develop improved countermeasures to overcome these technological advancements. In the year 2020, remote acoustic sensing from space and elsewhere will be a critical element for developing accurate structural signatures as well as for assessing activity levels within a target. New methodologies for passive and active sensing need to be developed and should be coupled with other types of remote sensing.

Olfactory Sensing. Although this sense is somewhat "exotic" today, since the mid-1980s there has been a resurgence of research into the sense of smell. Both military and civilian scientists have aimed their efforts at first identifying how the brain determines smell and then determining how science can replicate the process synthetically. The results of these efforts are impressive. An electronic "sniffer" for analyzing odors needs two things: (1) the equivalent of a nose to do the smelling and (2) the equivalent of a brain to interpret what the nose smelled. A British team employed arrays of gas sensors made of conductive polymers working at room temperature. An electrical current passes through each sensor. When odor emissions collide with the sensors, the current changes and responds uniquely to different gases. The next step entailed synthesizing the various currents into a meaningful pattern. A neural net (a group of interconnected microprocessors that simulate some basic functions of the brain) identified the patterns. The neural net was able to learn from experience and did not need to know the exact chemistry of what it was smelling. It could recognize changing patterns, giving it a unique ability to detect new or removed substances.19

Swedish scientists took this a major step further. Their development of a light-scanned semiconductor sensor shows great promise in the area of long-range sensing. This sensor is coated with three different metals (platinum, palladium, and iridium) and heated at one end to create a temperature gradient. This process allows the sensor to respond differently to gases at every point along its surface. The sensor is read with a beam of light that generates an electrical current across the surface. When fed into a computer, the current produces a unique image of each smell, which is then compared to a database to determine the origin of the smell.20

Despite these impressive findings, present technology requires the gases to come in contact with the sensor. The next step is to fuse the sensory capabilities into a sort of particle beam that-when it comes into contact with the odors-would react in a measurable way. Similar to the way radar works, beam segments would return to the processing source, and the object from which the odors emanated would be identified. This process could be initiated from space, air, or land and would be fused with other remote-sensing capabilities to build a more complete picture. Studies on laser reflection demonstrate the ability to correct for errors induced by moving from the atmosphere to space. There is every reason to believe that the next couple of decades will produce similar capabilities for particle beams. The ability to fuse odor sensors within these beams and receive the reactions for processing may also be feasible in the prescribed time frame.

Gustatory Sensing. Another area that has not received a tremendous degree of attention is the sense of taste. In many ways, ideas concerning the sense of taste may sound much like those concerning the sense of smell. The distinction is that the sample tasted is part of (or attached to) a surface of some sort, whereas the sense of smell relies on airborne particles to find their way to receptors in the nose. The study of taste makes frequent reference to smell-probably due to similar mechanisms whereby the molecules in question come in contact with the receptor (be they smell or taste receptors).

Taste, in and of itself, will probably not be a prime means of identification. It can, however, be one of the discriminating bits of information that can aid in identifying ambiguous targets identified by other systems. It also provides another characteristic that must be masked or spoofed to truly camouflage a target. Taste could be used to detect silver paint that appears to be aluminum aircraft skin on a decoy. It could be used to "lick" the surface of the ocean to track small, polluting craft. It could even be used to taste vehicles for radioactive fallout or chemical/biological surface agents. We could detect contamination before sending ground troops into an area. By putting a particular flavor on our vehicles, we may be able to develop a taste version of identification friend or foe (IFF).

The sense of taste provides the human brain with information on characteristics of sweetness, bitterness, saltiness, and sourness. The exact physiological mechanism for determining these characteristics is not yet completely understood. Theory has it that sweet and bitter are determined when molecules of the substance present on the tongue become attached to "matching" receptors. The manner in which the molecules match the receptors is believed to be a physical interlocking of similar shapes-much the same way that pieces of a jigsaw puzzle fit together. Once the interlocking takes place, an electrical impulse is sent to the taste center in the brain. It is not known whether there are thousands of unique taste receptors (each sending a unique signal) or if there are only a few types of receptors (resulting in many unique combinations of signals). Experts think that saltiness and sourness are determined in a different manner. Rather than attaching themselves to the receptors, these tastes "flow" by the tips of the taste buds, exciting them directly through the open ion channels in the tips.21

To make a true bitter/sweet taste sensor in space would require technology permitting the transmission of an actual particle of the object in question. This ability appears to be outside the realm of possibility in the year 2020. An alternative would be to scan the object in question with sufficient "granularity" to determine the shape of the individual molecules and then compare this scanned shape with a catalog of known shapes and their associated sweet or bitter taste. Such technology is currently available in the form of various types of scanning/tunneling electron microscopes. The shortcoming of these systems is that they require highly controlled atmospheres and enclosed environments to permit accurate beam steering and data collection. The jump to a "remote electron microscope" also may not be possible by 2020.

An alternate means of determining surface structure remotely involves increasing the distance from which computerized axial tomography (CAT) scans or nuclear magnetic resonance (NMR)22 are conducted. Although current technology requires rather close examination (on the order of several inches), at least a portion of the "beam" transmission takes place in the normal atmosphere. Extrapolation of this capability seems to offer the possibility of scanning from increased distances.

Performing a taste scan from space to determine the sweetness/bitterness of an object will require continued research and a substantial advance in technology. Taste research must continue, and the mechanics of taste must be fully understood. The product of this research would be a database that catalogs the appropriate characteristics of molecules related to taste. Without understanding how taste works, we could not produce a properly designed scanner.

Remote scanning is a great challenge that involves getting the beam to the targeted object and capturing the reflected beam pattern to determine the surface shape at the molecular level. Getting the beam to the target entails the generation, aiming, and power of the beam.

The scanning beam (of whatever type provides the desired granularity) must be generated with sufficient power to reach the target with enough energy to reflect a detectable and measurable pattern for collection and subsequent analysis. Both beam generators and collectors would be located (not necessarily co-located) in space (most likely in low earth orbit-the generators, at least). Maximum distance from generator to target is probably on the order of 1,000 to 1,500 miles or the slant range from a 300-to-400-mile LEO to the line-of-sight horizon. Target-to-collector distances would be, at a minimum, the same as those from generator to target (if collection is accomplished in LEO), to a maximum of 25,000 miles (if collection occurs in geosynchronous orbit).
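The generator-to-target distance can be checked with simple geometry. The sketch below is illustrative only: it assumes a spherical earth with a mean radius of 3,959 statute miles and ignores atmospheric refraction and terrain, and the function name is hypothetical. Under those assumptions the line-of-sight distance from a platform at altitude h to the horizon is sqrt(h(2R + h)), which for 300-to-400-mile orbits comes out somewhat longer than 1,500 miles, so the range quoted above is best read as an order-of-magnitude estimate.

```python
import math

# Assumed mean earth radius in statute miles (spherical-earth approximation).
EARTH_RADIUS_MILES = 3959.0


def horizon_slant_range(altitude_miles: float,
                        radius_miles: float = EARTH_RADIUS_MILES) -> float:
    """Line-of-sight distance from an orbiting platform to the geometric horizon."""
    if altitude_miles < 0:
        raise ValueError("altitude must be non-negative")
    # Right triangle: (R + h)^2 = R^2 + d^2, so d = sqrt(h * (2R + h)).
    return math.sqrt(altitude_miles * (2.0 * radius_miles + altitude_miles))


for altitude in (300.0, 400.0):
    print(f"{altitude:.0f}-mile orbit: "
          f"{horizon_slant_range(altitude):.0f} miles to the horizon")
```

A target at the horizon is the worst case; a target directly beneath the generator is only one orbital altitude away, so beam power would have to be sized for a wide span of ranges.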

To ensure the gathering of proper data, we must aim and focus the beam exactly at the desired target. Aiming will require compensation for atmospheric inconsistencies. Specifically, we would fire a laser into the atmosphere to detect anomalies along the general path of the actual beam and compensate accordingly. The refined focus should confine the beam to a target area of no more than one or two square feet.

We must also consider what might happen when the beam (of whatever type) hits the target area. Will its power be so great that the target would be burned or damaged? Further, will adversaries be able to detect scanning in the target area? These are some of the challenges we must overcome in order to bring the taste sensor to reality.

Capturing the reflected beam also poses a significant challenge. The general technique for analyzing objects with scanning methods calls for a beam from a known location and of known power to "illuminate" the targeted object. Since the surface of the object is irregular, the beam reflects in various directions. Thus, the object must be surrounded by collectors to ensure the collection of all reflected energy. By noting the collector and the portion of the beam collected, we can reconstruct the surface that reflects the beam.

Since it is impossible to surround the earth completely with a single collecting surface, a large number of platforms must serve as collectors. All platforms would focus their collectors on the targeted area and compensate in a manner similar to aiming compensation for the beam generator (see above). Any platform with line of sight directly to the target would be suitable for collection. Platforms "below" the horizon but able to capture reflected energy in a manner similar to the over-the-horizon backscatter (OTHB) radar system would also be acceptable. With appropriate algorithms and beam selection, the entire sensor constellation could conceivably be available for collection all the time.

Fusing of the reflected data from a single "taste" would take place on a central platform, probably in geosynchronous orbit. Information about the taste measurement would include scanning-beam composition, pulse-coding data, firing time, location of beam generator, aiming compensation data, focusing data, location of targeted area, collector position, collector compensation data, and actual collected data including time. Because we are collecting only a fraction of the "reflected energy" from scanning beams, we need all this information in order to know which part of the "taste signature" we have put together.

Tactile Sensing. Potential exists for the development of an earth-surveillance system using a tactile sensor for mapping and object determination. Rather than viewing and tracking items of interest optically, objects could be identified, classified, and tracked via tactile stimulation-and-response analysis. This method of surveillance has advantages over optical viewing in that it is unaffected by foul weather, camouflage, or other obscuration techniques.

Tactile sense provides humans awareness of contact with an object. Through this sense, we learn the shape and hardness of objects, and-by using our cutaneous sensors-we receive indications of pressure, warmth, cold, and pain. A man-made tactile sensor emulates this human sense by using densely arrayed elementary force sensors (or taxels), which are capable of image sensing through the simultaneous determination of an object's force distribution and position measurements.23
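How a taxel array yields both a force distribution and a contact position can be shown with a small sketch. It assumes the array is read out as a rectangular grid of nonnegative force values; the function name, the grid layout, and the sample readings are hypothetical and do not describe any particular sensor.

```python
from typing import List, Tuple


def contact_summary(taxels: List[List[float]]) -> Tuple[float, Tuple[float, float]]:
    """Return (total_force, (row_centroid, col_centroid)) for a grid of taxel readings."""
    total = sum(sum(row) for row in taxels)
    if total <= 0:
        raise ValueError("no contact force detected")
    # Force-weighted average of the row and column indices gives the contact position.
    row_c = sum(r * f for r, row in enumerate(taxels) for f in row) / total
    col_c = sum(c * f for row in taxels for c, f in enumerate(row)) / total
    return total, (row_c, col_c)


# Invented readings: two adjacent taxels in the middle row are pressed equally.
readings = [
    [0.0, 0.0, 0.0],
    [0.0, 2.0, 2.0],
    [0.0, 0.0, 0.0],
]
total_force, centroid = contact_summary(readings)
print(total_force, centroid)
```

The grid itself is the force distribution (the tactile "image"), while the centroid gives a single position measurement for the contact.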

Recent advances in tactile sensor applications have appeared in the areas of robotics, cybernetics, and virtual reality. These simple applications attempted to replicate the tactile characteristics of the human hand. One project, the Rutgers Dexterous Hand Master, combines a mechanical glove with a virtual-reality scenario to allow an operator to "feel" virtual-reality images. This research has advanced the studies of remote-controlled robots that could be used in such ventures as construction of a space station or cleaning up a waste site.24

The challenge lies in developing tactile sensors that are capable of remotely "touching" an object to determine its characteristics. This challenge elicits visions of a large, gloved hand reaching out from space to squeeze an object to determine if it is alive. We can develop this analogy by expanding the practical concept of radar.

Radar is a radio system used to transmit, receive, and analyze energy waves to detect objects of interest or "targets." In addition, it can determine target range, speed, heading, and relative size. One possible way to identify tactile characteristics of an interrogated target is to analyze the radar returns and compare them to known values. When a radio wave strikes an object, a certain amount of its energy reflects back toward the transmitter. The intensity of the returned energy depends upon the distance to the target, the transmission medium, and the composition of the target. For example, energy reflected off a tree exhibits characteristics different from those of energy reflected off a building (because a tree absorbs more energy). By analyzing the energy returns, we could conceivably determine target characteristics of shape, temperature, and hardness by comparing the returns to known values. By using virtual reality, we could then transform the tactile characteristics of various objects interrogated in an area of surveillance into a three-dimensional graphical representation.
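The idea of comparing radar returns to known values can be sketched as a nearest-signature lookup. Everything specific here is an assumption made for illustration: the three-band "signature," the catalog entries, and their numbers are invented, and a fielded system would need far richer features than this.

```python
import math
from typing import Dict, Sequence

# Hypothetical reference signatures: fraction of transmitted energy returned
# in three illustrative frequency bands. The values are invented for this sketch.
KNOWN_SIGNATURES: Dict[str, Sequence[float]] = {
    "tree": (0.10, 0.15, 0.05),       # absorbs most of the energy
    "building": (0.60, 0.55, 0.70),
    "vehicle": (0.80, 0.40, 0.30),
}


def classify_return(measured: Sequence[float],
                    catalog: Dict[str, Sequence[float]] = KNOWN_SIGNATURES) -> str:
    """Return the catalog label whose signature is closest (Euclidean) to the measurement."""
    return min(catalog, key=lambda label: math.dist(measured, catalog[label]))


print(classify_return((0.12, 0.20, 0.08)))
```

A nearest-match rule always returns some label, so a practical version would also need a distance cutoff for reporting "unknown object."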

The significant value of tactile sensor technology lies not in the development of a replacement for current surveillance sensors but in the prospect for gaining unique information. A typical surveillance radar provides the "when, where, and how" for a particular target, but a tactile sensor adds the "what" and, potentially, the "who."

Countermeasures to Sensing. Once an adversary perceives a threat to his structure and system, he usually develops and employs countermeasures. The concepts for sensing presented here, albeit rooted in leading-edge technology, are not exempt from enemy countermeasures. Potential enemy countermeasures in the year 2020 include killer antisatellites (ASAT), jamming, and ground-station attacks. Target-protection countermeasures include concealment, camouflage, and deception (CC&D) and operations security (OPSEC). Technical experts must address these threats and provide countermeasures early in the design phase of this sensing system.

Active and passive systems can overcome jamming, ground-station attack, and enemy OPSEC. Frequency hopping and "hardening" of space links are both effective in countering jamming. If hopping rates-which currently exceed 3,000 hops per second-continue to increase exponentially, many forms of jamming will become minor irritants. We can overcome ground-station attack by improving physical security and replicating critical nodes. Such redundancy can be expensive, but if we incorporate it early in the design phase, it can be efficient and cost-effective. The best way to counter enemy OPSEC is through passive measures such as security training, HUMINT, and reduction of the number of people who "need to know" and through active measures such as HUMINT and exploitation of the omnisensorial capability of this system.

Killer ASAT and CC&D capabilities are much more difficult and costly to counter. Decoy satellites and redundant space-based systems can be effective. However, we must pursue some cost-effective means of hardening in order to ensure the survivability of our space systems.

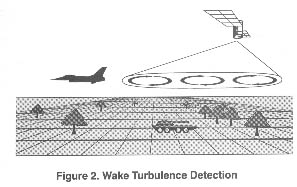

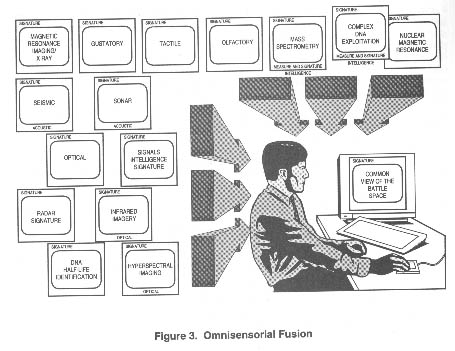

Data Fusion

Fusion of all the information collected from the various sensors mentioned above is the key to making this data useful to the war fighter (fig. 3). Without the appropriate fusion process, the war fighter will be the victim of information overload, a condition that is not much better-and is sometimes worse-than no information at all. The goal of this initiative is to fuse vast amounts of data from multiple sources in real time and make it available to the war fighter on demand.

Today, we are able to collect data from a variety of sensor platforms (e.g., satellites as well as air-breathing and HUMINT sources). What we are not able to do, however, is fuse large amounts of data from multiple sources in near real time. We have what amounts to "stovepipe" data-that is, data streams that are processed independently. As we discovered in Operation Desert Storm, deficiencies exist in sharing and relating intelligence from different sources. Consequently, the war fighter is not able to see the whole picture-just bits and pieces.

Today, sensor data is capable of drowning us. The sheer volume of this data can cripple an intelligence system:

Over 500,000 photographs were processed during Operation Desert Storm. Over its 14-year lifetime, the Pioneer Venus orbiter sent back 10 terabits (10 trillion bits) of data. Had it performed as designed, the Hubble Space Telescope was expected to produce a continuous data flow of 86 billion bits a day or more than 30 terabits a year. By the year 2000, satellites will be sending 8 terabits of raw data to earth each day. (Emphasis in original)25

As staggering as these figures are, the computing power on the horizon may be able to digest this much data. The Advanced Research Projects Agency (ARPA) is sponsoring the development of a massively parallel computer capable of operating at a rate of 1 trillion floating-point operations per second (1 teraFLOPS). Parallel processing uses multiple processors to execute several instruction streams concurrently, significantly reducing the time required to process information.

Once the data is processed into usable information or intelligence, the next requirement becomes a means of storing and retrieving a huge database or library. "Advances in storage technology in such media as holography and optical storage will doubtlessly expand these capacities."26 An optical tape recorder capable of recording and storing more than a terabyte of data on a single reel is under development.

Vertical block line (VBL) technology offers the possibility of storing data in nonvolatile, high-density, solid-state chips. This magnetic technology offers inherent radiation hardness, data erasability and security, and cost-effectiveness. Compared to magnetic bubble devices, VBL offers higher storage density and higher data rates at reduced power. VBL chips could achieve (volumetric) storage densities ranging from one gigabit to one terabit per cubic centimeter. Chip data rates, a function of chip architecture, can range from one megabit per second to 100 megabits per second. Produced in volume, chips are expected to cost less than one dollar per megabyte.

If we are to provide the user real-time, multisource data in a usable format, leaps in data-fusion technologies must occur. A new technology-the photonic processor-could increase computational speeds exponentially. The processing capabilities and power requirements of currently fielded and planned electronic processors are determined almost solely by the low-speed and energy-inefficient electrical interconnections in electronic boards, modules, or processing systems. Processing speeds of electronic chips and modules can exceed hundreds of megahertz, whereas electrical interconnections run at tens of megahertz due to limitations in standard transmission lines. More significantly, the majority of power consumed by the processor system is used by the interconnection itself. Optical interconnections, whether in the form of free-space, board-to-board busses or computer-to-computer fiber-optic networks, consume significantly less electric power, are inherently robust with regard to electromagnetic interference (EMI) and electromagnetic pulses (EMP), and can provide large numbers of interconnection channels in a small, low-weight, rugged subsystem. These characteristics are critically important in space-based applications.27

This technology of integrating electronics and optics reduces power requirements, builds in EMI/EMP immunity, and increases processing speeds. Though immature, the technology has great potential. If it were possible to incorporate photonic-processing technologies into a parallel computing environment, increases of several orders of magnitude in processing speeds might occur.

The fusing of omnisensorial data will require processing speeds equal to or greater than those mentioned above. Onboard computer (OBC) architectures will use at least three computers that perform parallel processing; they will also feature a voting process to ensure that at least two of the three OBCs agree. The integration of neural networks in OBC systems will provide higher reliability and will enhance process-control techniques.
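The two-of-three voting process can be sketched in a few lines. This is a minimal illustration, not a flight-software design: the function name is hypothetical, and it assumes each OBC produces a discrete result that can be compared for exact equality, whereas numerical outputs would need to be compared within a tolerance.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def vote(outputs: Sequence[Hashable], quorum: int = 2) -> Optional[Hashable]:
    """Return the value that at least `quorum` computers agree on, else None."""
    if not outputs:
        return None
    # most_common(1) yields the single most frequent value and its count.
    value, count = Counter(outputs).most_common(1)[0]
    return value if count >= quorum else None


# Two of three computers agree, so the dissenting result is outvoted.
print(vote(["track-17", "track-17", "track-18"]))
# No two computers agree, so the voter reports no valid result.
print(vote(["track-17", "track-18", "track-19"]))
```

Returning None when no quorum exists lets the caller decide how to handle the disagreement, for example by repeating the computation or flagging the condition to ground crews.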

Change detection and pattern recognition as well as chaos modeling techniques will increase processing speeds and reduce the amount of data to be fused. Multiple sensors, processing their own data, can increase processing speeds and share data between platforms through cross-cueing techniques. Optical data-transmission techniques should permit high data throughputs to the fusion centers in space, on the ground, and/or in the air.
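A simple sketch shows how change detection can reduce the amount of data to be fused. It assumes two co-registered frames of integer sensor values with identical dimensions; the function name, the threshold, and the sample frames are hypothetical.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]


def changed_cells(previous: Frame, current: Frame,
                  threshold: int = 10) -> Dict[Tuple[int, int], int]:
    """Return {(row, col): new_value} for cells that moved by more than `threshold`."""
    if len(previous) != len(current) or any(
        len(p) != len(c) for p, c in zip(previous, current)
    ):
        raise ValueError("frames must have the same shape")
    return {
        (r, c): new
        for r, (prev_row, cur_row) in enumerate(zip(previous, current))
        for c, (old, new) in enumerate(zip(prev_row, cur_row))
        if abs(new - old) > threshold
    }


earlier = [[100, 100, 100], [100, 100, 100]]
later = [[100, 103, 100], [100, 180, 100]]  # one small fluctuation, one real change
delta = changed_cells(earlier, later)
print(delta)
```

Only the cells that changed by more than the threshold are passed on, so a platform that processes its own data in this way would forward a small fraction of each frame to the fusion center.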

The National Information Display Laboratory is investigating technologies that would aid in the registration and deconfliction of omnisensorial data, data fusion, and image mosaicking (the ability to consolidate many different images into one). "Information-rich" environments made accessible by the projected sensing capabilities of the year 2020 will drive the increasing need for georeferenced autoregistration of multisource data prior to automated fusion, target recognition/identification, and situation assessment. Image mosaicking will enhance the usability of wide-area, imagery-based products. Signals, multiresolution imagery, acoustical data, analyzed sample data (from tactile/gustatory sensing), atmospheric/exoatmospheric weather data, voice, video, text, and graphics can be fused in an "infobase" that provides content- and context-based access, selective visualization of information, local image extraction, and playback of historical activity.28

Near-term Technologies and Operational Exploitation Opportunities

Pursuit of nonmilitary omnisensorial applications in the early stages of development could provide a host of interested partners, significantly reduce costs, and increase the likelihood of congressional acceptance. These applications include government uses, consumer uses, and general commercial uses.

Government uses of this capability could include law enforcement, environmental monitoring, precise mapping of remote areas, drug interdiction, and assistance to friendly nations. The capability to see inside a structure could prevent incidents such as the one that occurred at the Branch Davidian compound in Waco, Texas, in 1993. Drug smuggling could be detected by clandestinely subjecting suspects to remote sensing. Friendly governments could receive real-time, detailed intelligence of all insurgency/terrorist operations in their countries. Finally, just as we presently track the migratory patterns of birds by using LANDSAT multispectral imagery, so might we track the spread of disease to allow early identification of infected areas.

Consumer uses could range from providing home security to monitoring food and air quality. Home detection systems would be cheaper and more capable. Not only would they be able to sense smoke and break-ins, but also gas leaks and seismic tremors. They could also provide advance warning of flash floods and other imminent natural disasters. Further, sensors could identify spoiled food and test the air for harmful particles.

Commercial uses could include enhanced airport security, major advances in medicine, and a follow-on to the air traffic control system. Everyone from farmers to miners would benefit from remote sensors by no longer having to rely on trial and error. For example, aircraft scans prior to takeoff would provide new levels of safety. Further, scans of patients would effectively eliminate exploratory surgery because doctors would be able to view internal problems on computer screens. Eventually, doctors may be able to treat patients largely on the basis of information obtained from computer imaging.

A good example of a commercial application is the development of an aerospace traffic location and sensing (ATLAS) system analogous to the current air traffic control system. Space is a hazardous environment because of the accumulation of satellites, debris, and so forth, and will be even more hazardous by 2020. Flying in space without an "approved" flight plan, particularly in LEO, is especially risky. The space shuttle, for instance, occasionally makes unplanned course corrections in order to avoid damage from debris. Similarly, as the boundaries between space and atmospheric travel become less distinct (witness the existence of transatmospheric vehicles), this system could conceivably integrate all airborne and space-transiting assets into a seamless, global, integrated system.

This system envisions that some of the same satellites used as part of the integrated structural sensory signature system would also be used for ATLAS. It would require only a small constellation of space-surveillance satellites (fewer than 20) orbiting the globe. ATLAS satellites would carry the same omnisensorial packages capable of tracking any object in space larger than two centimeters. All satellites deployed in the future would be required to participate in the ATLAS infrastructure and would carry internal navigation and housekeeping packages, perform routine station-keeping maneuvers on their own, and constantly report their position to ATLAS satellites. Only anomalous conditions (e.g., health and status problems or collision threats) would be reported to small, satellite-specific ground crews. ATLAS ground stations (primary and backup) would be responsible for handling anomalous situations, coordinating collision-avoidance maneuvers with satellite owners, authorizing corrective maneuvers, and coordinating space-object identification (particularly threat identification). The satellite constellation would be integrated via cross-links, allowing all ATLAS-capable satellites to share information. The aerospace traffic control system of the future would eliminate or downsize most of the current satellite-control ground stations as well as the current ground-based space-surveillance system.

Elements of the ATLAS system will include improved sensors for tracking space objects (including debris and maneuvering targets), software to automatically generate and deconflict tracks and update catalogs, and an analysis-and-reporting "back end" that will provide surveillance and intelligence functions as needed. Air and space operators would have a system that would allow them to enter a flight plan and automatically receive preliminary, deconflicted clearance. In addition, ongoing, in-flight deconfliction and object avoidance would also be available without operator manipulation. The system could integrate information from even more sophisticated sensors of the future, such as electromagnetic, chemical, visible, and omnispectral devices. "Handoffs" from one sector to another would occur, but only in the onboard ATLAS brain, which would be transparent to the operator. ATLAS provides a vision of a future-generation smart system that integrates volumes of sensory information and fuses it into a format that gives operators just what they need to know, when they need to know it.
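Automated track deconfliction can be illustrated with a closest-approach check between two tracks. The sketch rests on a strong simplifying assumption: each object moves in a straight line at constant velocity over the short interval examined, which real orbital and atmospheric trajectories only approximate. The function names, units, and separation threshold are hypothetical.

```python
from typing import Sequence, Tuple

Vector = Sequence[float]


def closest_approach(p1: Vector, v1: Vector,
                     p2: Vector, v2: Vector) -> Tuple[float, float]:
    """Return (time, miss_distance) of closest approach at time >= 0."""
    dp = [a - b for a, b in zip(p1, p2)]   # relative position
    dv = [a - b for a, b in zip(v1, v2)]   # relative velocity
    dv2 = sum(x * x for x in dv)
    # Minimize |dp + dv * t|; clamp to t = 0 if the objects are already separating.
    t = 0.0 if dv2 == 0 else max(0.0, -sum(p * v for p, v in zip(dp, dv)) / dv2)
    miss = sum((p + v * t) ** 2 for p, v in zip(dp, dv)) ** 0.5
    return t, miss


def in_conflict(p1: Vector, v1: Vector, p2: Vector, v2: Vector,
                min_separation: float) -> bool:
    """True if the predicted miss distance falls below the required separation."""
    _, miss = closest_approach(p1, v1, p2, v2)
    return miss < min_separation


# Two objects closing head-on with a one-unit lateral offset (arbitrary units).
t, miss = closest_approach((0, 0, 0), (1, 0, 0), (10, 1, 0), (-1, 0, 0))
print(t, miss, in_conflict((0, 0, 0), (1, 0, 0), (10, 1, 0), (-1, 0, 0), 2.0))
```

A clearance function of the kind described above would run such a check for each pair of filed tracks and withhold clearance, or propose a maneuver, when any pair is in conflict.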

The ATLAS system is just one small commercial application of the comprehensive structural sensory signature concept that fuses data from a variety of sophisticated sensors of all types to provide the war fighter of tomorrow with the right tools to get the job done.

Conclusion

The precision that technology offers will change the face of warfare in the year 2020 and beyond. Future wars will rely not so much on sea, land, or airpower as on information. The victor in the war for information dominance will most likely be successful in the battle space. The key to achieving information dominance will be the technology employed in the area of surveillance and reconnaissance, particularly a "sensor-to-shooter" system that will enable "one shot, one kill" combat operations. A network of ground- and space-based sensors that mimic the human senses, together with hyperspectral and fractal imagery, provides a diverse array of surveillance information that-when processed by intelligent, robust neural networks-not only can identify objects with a high degree of reliability but also can give the war fighter the sensation of being in or near the target area. The challenge for decision makers will be to develop a strategy that can turn this vision into reality.